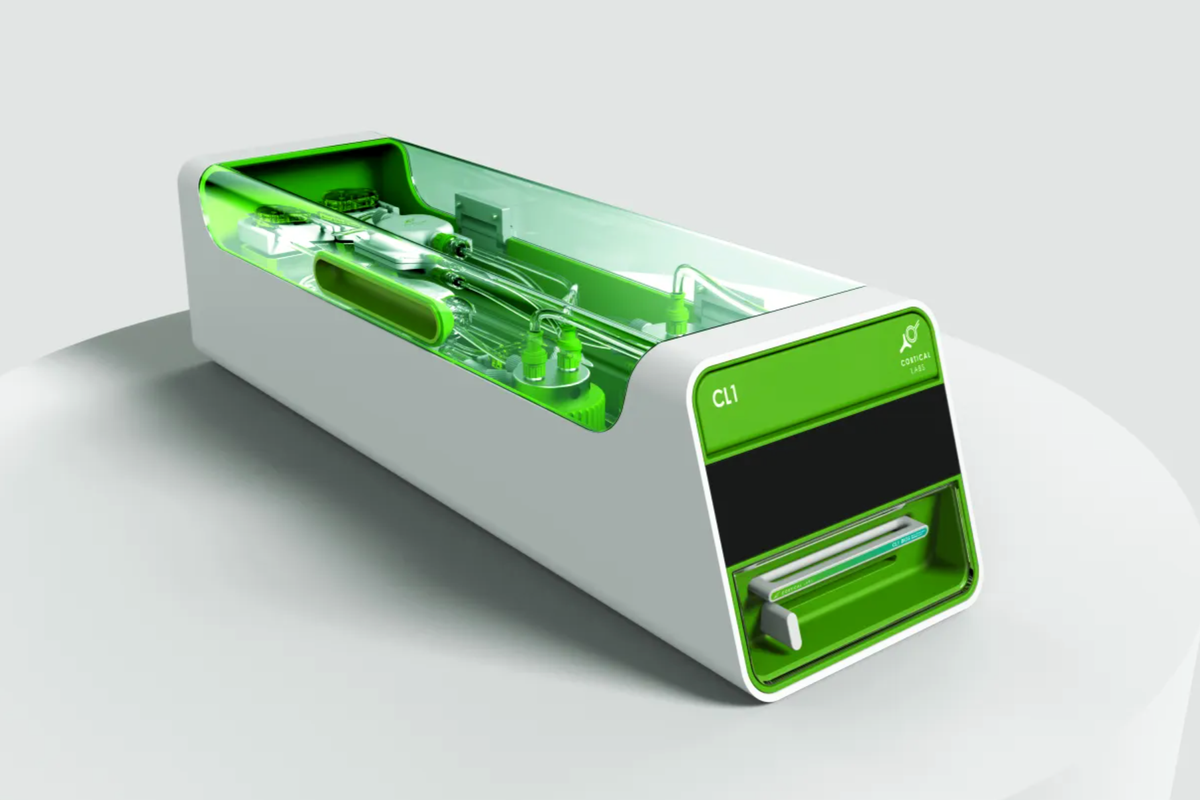

In 2025, computing crossed a historic line. Scientists and engineers introduced CL1, billed as the first commercially available biological computer: a machine that combines living human neurons with conventional silicon hardware.

Unlike conventional computers, which simply execute code, CL1 relies on real brain cells that can learn, adapt, and change over time. It is one of the first commercial products in the emerging field of biological computing.

What is CL1?

CL1 is a hybrid computing system created by Cortical Labs. It combines three core technologies:

- Lab-grown human neurons

- Advanced silicon microchips

- Real-time software control systems

The neurons are grown from stem cells and placed on a specially engineered chip. Electrodes in the chip can both stimulate the cells and record their electrical activity, so signals travel in both directions between the software and the living neurons. The result behaves less like a conventional computer and more like a small, living neural network.
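To make this two-way signalling more concrete, here is a minimal Python sketch of a closed stimulate-and-record loop. Everything in it is hypothetical: `SimulatedElectrodeArray` is an invented stand-in for the chip, not Cortical Labs' actual software, and its "neurons" are a simple random model in which recently stimulated electrodes are more likely to report spikes. Real neurons behave in far more complex ways.

```python
import random


class SimulatedElectrodeArray:
    """Hypothetical stand-in for a neuron-on-chip device (NOT a real CL1 API).

    The 'neurons' are a toy random model: electrodes that were just
    stimulated are more likely to report spikes than the others.
    """

    def __init__(self, n_electrodes: int = 8, seed: int = 0) -> None:
        self.n_electrodes = n_electrodes
        self.rng = random.Random(seed)  # seeded so runs are repeatable
        self.last_stimulated: set[int] = set()

    def stimulate(self, electrodes: list[int]) -> None:
        """Software -> cells: deliver a pulse on each chosen electrode."""
        self.last_stimulated = set(electrodes)

    def record(self, window: int = 10) -> list[int]:
        """Cells -> software: spike counts per electrode over `window` time steps."""
        counts = []
        for e in range(self.n_electrodes):
            # Toy assumption: stimulated sites spike with p=0.8, others with p=0.1.
            p_spike = 0.8 if e in self.last_stimulated else 0.1
            counts.append(sum(self.rng.random() < p_spike for _ in range(window)))
        return counts


def closed_loop(device: SimulatedElectrodeArray, inputs: list[list[int]]) -> list[list[int]]:
    """Alternate writing (stimulate) and reading (record) for each input pattern."""
    responses = []
    for pattern in inputs:
        device.stimulate(pattern)
        responses.append(device.record())
    return responses


if __name__ == "__main__":
    device = SimulatedElectrodeArray(seed=42)
    patterns = [[0, 1], [6, 7]]
    for pattern, counts in zip(patterns, closed_loop(device, patterns)):
        print(pattern, counts)
```

The loop simply alternates between writing to the cells (`stimulate`) and reading from them (`record`). In a real closed-loop system, the software would use each recorded response to choose the next stimulation pattern.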

How is CL1 Different from Artificial Intelligence?

| Traditional AI | CL1 Biological Computer |
|---|---|
| Uses mathematical models | Uses living neurons |
| Has a fixed digital structure | Neurons can grow and form new connections |
| Learning is simulated in software | Learning happens through biological changes in the cells |
| High energy usage | Very low power consumption |

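What Can CL1 Do?

According to Cortical Labs, the neurons in CL1 can:

- Learn from rewards and penalties

- React to feedback in real time

- Play simple games such as Pong (first demonstrated in the company's 2022 DishBrain study)

- Gradually change their behavior over long periods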
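In the published Pong experiments, "reward" and "penalty" were not treats or shocks but patterns of electrical stimulation: a hit was followed by a predictable pattern and a miss by unpredictable noise. The ball's position was fed in as stimulation, and the paddle moved according to activity in designated regions of the culture. The Python sketch below outlines that loop under stated assumptions: the electrode count, frequencies, and the helper names `encode_ball`, `decode_paddle`, and `feedback` are all illustrative, not values or functions taken from Cortical Labs.

```python
import random
from dataclasses import dataclass

# Illustrative parameters only; NOT the values used by Cortical Labs.
N_SENSORY = 8            # hypothetical number of sensory electrodes
MIN_HZ, MAX_HZ = 4.0, 40.0


@dataclass
class Stimulus:
    electrodes: list[int]
    frequency_hz: float


def encode_ball(ball_y: float, distance: float) -> Stimulus:
    """Turn game state into stimulation (place code + rate code).

    ball_y in [0, 1] selects which sensory electrode fires (place code);
    distance in [0, 1] to the paddle sets the pulse rate, with a closer
    ball giving faster pulses (rate code).
    """
    ball_y = min(max(ball_y, 0.0), 1.0)
    distance = min(max(distance, 0.0), 1.0)
    electrode = min(int(ball_y * N_SENSORY), N_SENSORY - 1)
    frequency = MAX_HZ - distance * (MAX_HZ - MIN_HZ)
    return Stimulus([electrode], frequency)


def decode_paddle(up_spikes: int, down_spikes: int) -> int:
    """Compare two hypothetical 'motor' regions: +1 = up, -1 = down, 0 = stay."""
    if up_spikes > down_spikes:
        return 1
    if down_spikes > up_spikes:
        return -1
    return 0


def feedback(hit: bool, rng: random.Random) -> Stimulus:
    """Reward = the same predictable pattern every time; penalty = random noise."""
    if hit:
        return Stimulus(list(range(N_SENSORY)), 100.0)
    chosen = rng.sample(range(N_SENSORY), k=rng.randint(1, N_SENSORY))
    return Stimulus(sorted(chosen), rng.uniform(MIN_HZ, MAX_HZ))
```

The key design choice is the asymmetry in `feedback`: a hit always produces the same stimulus, while each miss produces a different random one, so a culture that tends to keep its inputs predictable would, in principle, be nudged toward hitting the ball.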

Why This Matters

Biological computing could have applications in:

- Artificial intelligence

- Brain–computer interfaces

- Neuroscience and drug testing

- Robotics and adaptive systems

- Ultra-low-power computing

Price and Availability

- Physical system price: approximately US$35,000

- Remote access: available through a Neuron-as-a-Service cloud offering

Official and Verified Sources

- corticallabs.com

- livescience.com

- abc.net

- yourstory.com